Testing and Examinations

The community responsible for licensing occupations and professions is large and diverse. Among others, it includes agency administrators and other staff, board members, testing experts, attorneys and the candidates themselves. All of these groups need a solid basis in the fundamentals of a fair examinations process.

- CLEAR Exam Review

- Glossary of Regulatory Terminology

- Innovative Assessment Formats

- Evaluating Exam Providers

- Licensing Exam FAQs

- Regulator FAQs

- Polls Conducted by CLEAR's Examination Resources and Advisory Committee

- Webinar Recordings

- Podcast Episodes

CLEAR Exam Review

For over three decades, CLEAR’s Examination Resources and Advisory Committee members have shared their expertise with this community. The most enduring of these contributions is CLEAR Exam Review, a semi-annual journal that discusses current licensing examination issues for a general audience. CLEAR members receive CLEAR Exam Review free of charge. Nonmembers may purchase issues for $15.

Glossary of Regulatory Terminology

The glossary provides definitions for words and phrases commonly used in the field of professional and occupational regulation. By design, it is limited in scope to broad definitions and aims to support meaningful conversations between international regulators. This project was initiated by the International Relations Committee of the Council on Licensure, Enforcement and Regulation (CLEAR), whose members include regulators from the United States, Canada, Qatar, New Zealand, Australia and the United Kingdom.

Suggest a new term or revised definition

Innovative Assessment Formats

This list of resources related to innovative assessment formats was developed for use by psychometricians involved in assessment formats. Some are new formats, while others focus on what is NOT a multiple-choice question. Other examples include a new twist on an older item type or assessment process, e.g., a constructed response or essay question evaluated by a computer grading system (a new approach) or a computerized drag-and-drop item format delivered with a new twist now called "drag and place." As another example, Standardized Patients or OSCEs, while not exactly new formats, are being used in perhaps new, more precise ways with video-monitored testing centers.

Evaluating Exam Providers

This tool can be used to assist regulatory administrators in evaluating the performance of exam providers. The following questions/topics may be used by regulatory administrators to evaluate the performance of a testing company at the conclusion of a contract, periodically within a contract, or during the selection process for a new testing company.

Licensing Exam FAQs

This list of FAQs was compiled by CLEAR's Examination Resources and Advisory Committee. Expand a topic and click a question to see ERAC's response.

We have completed a job or practice analysis and used the results to develop test specifications for our examination program. How do we use this information to develop valid examinations?

Test takers want to have as much information as possible about their performance on an examination. Regardless of what information an organization provides, test takers generally will ask for more. How is information best provided, and what information should be included?

I would like a reference for a clear, practical application of test equating methodology. Is there a step-by-step application of equating to a sample data set, including formulas and equations?

We use a criterion-referenced methodology when establishing the passing score for our examination. The result from applying this methodology is that the actual passing score varies from examination to examination. What is the best way to explain the variation?

Regulator FAQs

This list of FAQs was compiled by CLEAR's Examination Resources and Advisory Committee. Expand a topic and click a question to see ERAC's response or suggested resources for further reading.

- CLEAR Podcast Series

- Episode 40: Testing through the Years – a CLEAR Exam Review Retrospective. Includes a conversation regarding the move towards remote proctoring and some of the issues involved.

- Episode 27: What Makes a Difference for Candidates Taking Computer-based Tests. Touches on remote testing and issues with device diversity.

- 2023 Annual Conference Presentation

- The Remote Proctoring Room Scan Decision: How Test Providers Can Protect Against Legal Challenges. Discusses security measures and the legality of those measures (e.g., room scans).

- 2022 Annual Conference Presentation

- Live Remote Proctoring: Considerations and Outcomes

- Migrating High Stakes Licensure Examinations to Online Proctoring: Challenges and Opportunities

- Journal Articles

- Silverman, S., Caines, A., Casey, C., Garcia de Hurtado, B., Riviere, J., Sintjago, A., & Vecchiola, C. (2021). What happens when you close the door on remote proctoring? Moving toward authentic assessments with a people-centered approach. To Improve the Academy: A Journal of Educational Development, 39(3).

- Cherry, G., O'Leary, M., Naumenko, O., Kuan, L. A., & Waters, L. (2021). Do outcomes from high stakes examinations taken in test centres and via live remote proctoring differ? Computers and Education Open, 2, 100061.

- Weiner, J. A., & Henderson, D. (2022). Online remote proctored delivery of high stakes tests: Issues and research. Journal of Applied Testing Technology, 23, 1-4.

- CLEAR Licensing Exam FAQs – check out the answers in the previous FAQ section, particularly the answers to the following topics:

- Equating tests and use of scaled scores

- Test construction and assembly

- CLEAR Licensing Exam FAQs – check out the answers in the previous FAQ section, particularly the answers to the following topic:

- Subject Matter Experts (SMEs)

- CLEAR Podcast Series

- Episode 35: The Role of Public Board Members. Discusses why it’s important to include public board members.

- CLEAR Licensing Exam FAQs – check out the answers in the previous FAQ section, particularly the answers to the following topic:

- Subject Matter Experts (SMEs)

- Should educators or board members serve as SMEs?

- Subject Matter Experts (SMEs)

- CLEAR Licensing Exam FAQs – check out the answers in the previous FAQ section, particularly the answers to the following topic:

- Test Validity

- Testing and Examination FAQs – check out the answers in the previous FAQ section, particularly the answers to the following topic:

- Multiple Choice Question and Performance Examination Item Types Question

- See the ERAC publication, Development, Administration, Scoring and Reporting of Credentialing/Registration Examinations: Recommendations for Board Members > Developing Objectively Scored Examinations > Multiple-Choice Item Development, pp. 5-7

- A RESOURCE BRIEF (2013), Regulators’ Role in Establishing Defensible Credentialing Programs by Steve Nettles, Ed.D. and Larry Fabrey, Ph.D., provides an overview of the process for developing a defensible credentialing program and describes examination development as a straightforward but complex process. Each step must be carefully executed and well documented. There is some discussion regarding the costs associated with doing a role delineation study.

- A RESOURCE BRIEF (2004), Building and Managing Small Examination Programs by Roberta N. Chinn, Ph.D., Norman R. Hertz, Ph.D., and Barbara A. Showers, Ph.D., also provides an overview of the complexities associated with developing a small examination program. An FAQ associated with the brief partially addresses this question: “Is it really necessary to hire a psychometrician? We’ve heard that they are very expensive. Some of our board members are experienced educators and believe that our board can get by without one.” It emphasizes that the development and maintenance of high-stakes examinations require training and experience in order to meet professional testing standards.

- Pages 39-40 of Demystifying Occupational Regulation (Schmitt and Shimberg, 1996) explicitly discuss the problem with 70% cut scores.

- Glossary of Regulatory Terminology

- Pages 10-11 of the ERAC publication, Development, Administration, Scoring and Reporting of Credentialing/Registration Examinations, explicitly discuss the danger of arbitrary cut points.

- The 2006 CLEAR resource brief, Considerations in Setting Cut Scores by Roberta Chinn, has a paragraph on the social and political consequences of standards and one on the intended uses of cut scores, both of which are relevant.

Section to be added

- This is part of the larger question of “Why have regulation?”

- Public protection purpose – set minimal standard for entry

- No guarantee of consistency in training or alignment to entry to practice requirements

- There is an on-target CLEAR Exam Review article by Chad Buckendahl (Winter 2017 Issue, pp. 23-27) titled Clarifying the blurred lines between credentialing and education exams, which does a very good job of explaining the differences between educational and licensure assessments.

- See the previous Testing and Examinations FAQ: What issues should be considered when translating or adapting an examination?

- The International Test Commission offers sets of guidelines to entities that intend to offer an examination in a second language.

- A RESOURCE BRIEF (2006), To Translate, or not Translate: That is the Question by Norman R. Hertz includes guidelines for test adaptation. Summary: “To ensure a valid second-language examination requires considerable time and funding and should not be undertaken without a thorough evaluation of the potential outcomes, both positive and negative. Once a decision has been made to adapt an examination and sufficient resources have been allocated, this article can assist the credentialing program to achieve a valid adapted examination that will determine an individual’s competency to practice in a safe and effective manner”.

- There is relevant information in the ERAC publication, Development, Administration, Scoring and Reporting of Credentialing/Registration Examinations: Recommendations for Board Members, in the section Accommodating Candidates with Disabilities (pp. 13-14). The resource contains a specific example that addresses the question: Is it prudent for all licensure and certification agencies to abandon in-person proctoring/supervision and the environmental controls of a fixed test administration location in favor of the remote proctoring of tests?

- CLEAR Exam Review, Spring 2019, Perspectives on Testing: Responding to Regulator and Candidate Questions about Your Examination; Is your organization considering the use of remote proctoring? Grady Barnhill, M.Ed., pp. 11-12.

- Testing and Examination FAQs – check out the answers in the previous FAQ section, particularly the answers to the following topic:

- Equating Tests and Use of Scaled Scores: provides background information that may help with a basic understanding of scaled scores. It describes a way to reduce or even eliminate varying passing scores by assembling tests that are equal in average difficulty (p values), and notes that with a scaled criterion-referenced passing score, the score required for passing remains consistent.

- A relevant question answered in the FAQ: “We use a criterion-referenced methodology when establishing the passing score for our examination. The result from applying this methodology is that the actual passing score varies from examination to examination. What is the best way to explain the variation?”

- There is relevant information in the ERAC publication, Development, Administration, Scoring and Reporting of Credentialing/Registration Examinations: Recommendations for Board Members, which discusses the inappropriateness of arbitrary cut points (pp. 10-11) and the use of equating (p. 22).

- Also refer to the resources provided in the previous question in this section, “Why can’t the organization just use 70% as the cut point?”

- This question is answered in the previous Testing and Examinations FAQs section - Test Construction and Assembly.

- RESOURCE BRIEF (2013): Regulators’ Role in Establishing Defensible Credentialing Programs by Steve Nettles, Ed.D. and Larry Fabrey, Ph.D. This resource defines important responsibilities and roles for state regulators in ensuring the defensibility of the credentialing programs used by their organizations.

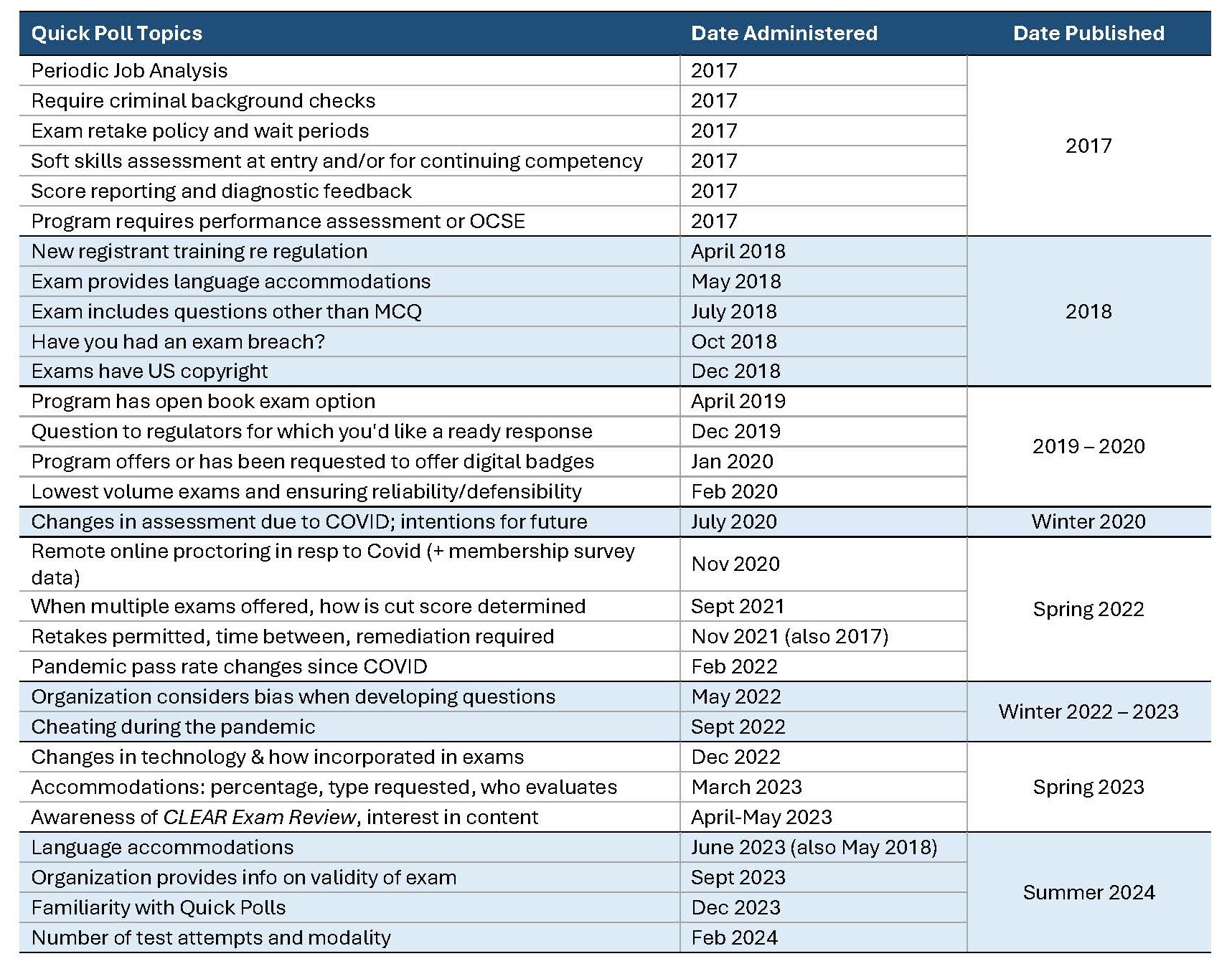

Polls Conducted by CLEAR's Examination Resources and Advisory Committee

Webinar Recordings

Previous webinar topics related to testing and examinations are available for purchase in CLEAR's store.

- Tracking the New Normal – Factors Related to Variations in Pass Rates

- Beyond Data Forensics- How to Deal with Flagged Candidates

- Granted or Denied? Interesting Candidate Complaints and Appeals and How Regulators Responded

- Maximizing SME Engagement- How to Recruit, Manage, and Retain Your Experts

- Increasing the Accuracy of Licensure Decisions: On the benefits of more, shorter exams

- Artificial Intelligence in Regulatory Practice: Opportunities and Considerations

- Alternatives to a Licensure Exam - Oregon State Bar task force

Podcast Episodes

Episodes of the podcast Regulation Matters: a CLEAR conversation are free to access and listen to.

- Episode 74: New Pathways - Alternatives to the Licensure Exam

- Episode 40: Testing Through the Years - a CLEAR Exam Review Retrospective

- Episode 34: Conflicts of Interest with SMEs

- Episode 27: What Makes a Difference for Candidates Taking Computer-based Tests?

- Episode 17: Role of a Content Developer in Licensing Exams

- Episode 15: Working with Subject Matter Experts